Every QA leader faces a tangled web of testing tools, each demanding custom integrations. The Model Context Protocol promises to cut through this chaos with a standardized interface between AI models and live systems. But is this the architectural breakthrough that finally unifies AI-assisted testing, or another protocol war?

Every QA leader faces the same exhausting reality: a tangled web of testing tools, each demanding its own API integration, authentication dance, and maintenance overhead. CI/CD pipelines talk to test runners through custom scripts. Defect trackers need bespoke connectors. Analytics platforms require yet another integration layer. Now multiply that complexity by AI agents that promise to automate your quality pipeline but can't access your systems without brittle, point-to-point connections that break with every vendor update.

The Model Context Protocol (MCP) promises to cut through this chaos with a single, standardized interface between AI models and live systems. But is this the architectural breakthrough that finally unifies AI-assisted testing? Or are we watching another protocol war unfold, destined to become tomorrow's technical debt? The answer determines whether QA organizations waste the next three years chasing an integration mirage or seize a genuine opportunity to transform how quality works.

This article cuts through the hype to deliver a clear strategic verdict: MCP introduces a unifying layer that can revolutionize test design, execution, and analysis, but only if adoption strategies balance innovation with governance, cost, and operational maturity. Here's what engineering leaders need to know.

What is the Model Context Protocol (MCP) and why should QA leaders care?

MCP standardizes how large language model (LLM) agents connect to external tools and data, turning them from passive text generators into active orchestrators of quality pipelines. The goal is to eliminate the integration debt that currently consumes 30-40% of QA engineering time (Anthropic MCP Documentation).

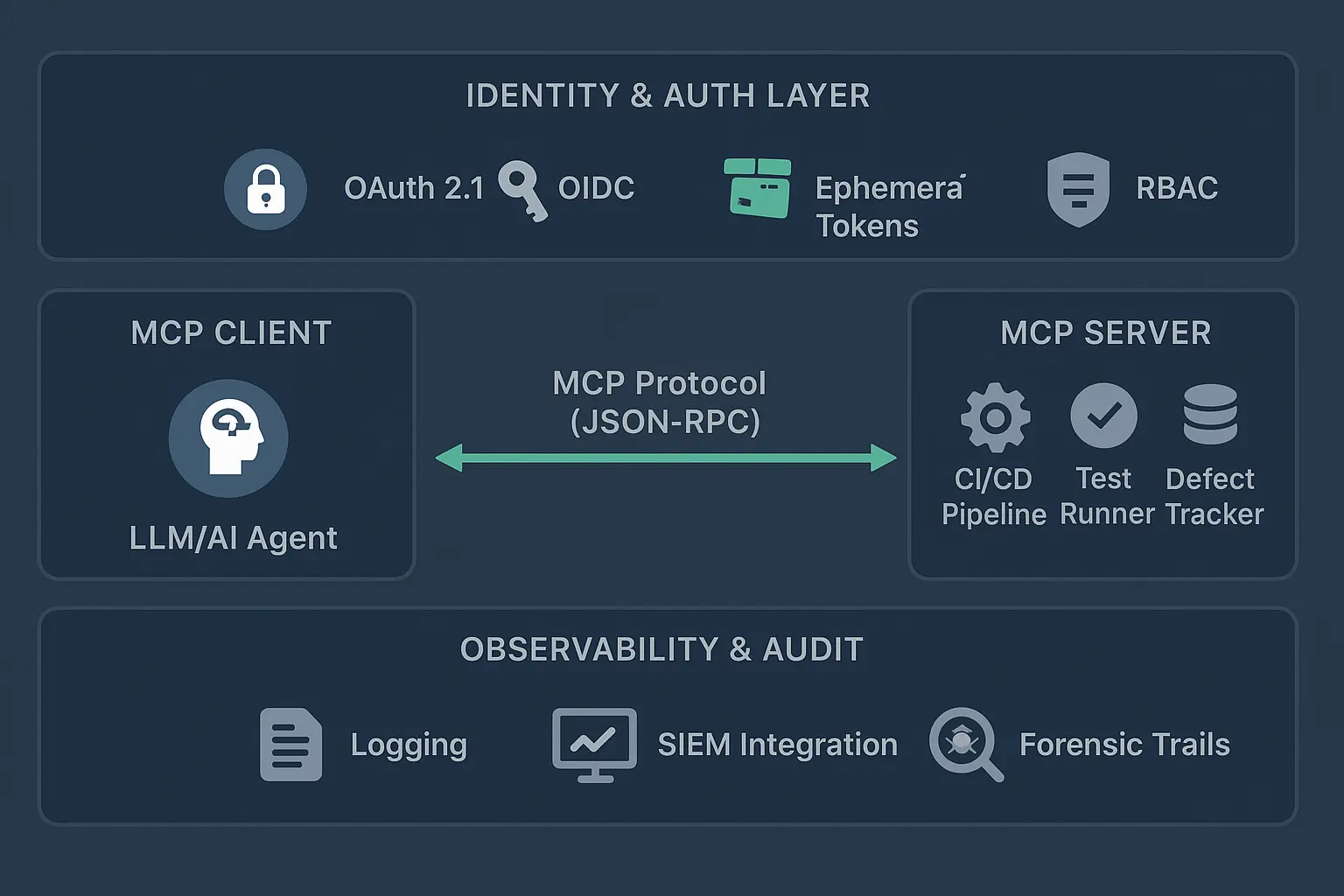

MCP exposes a server-client architecture:

The MCP server advertises "tools", e.g., APIs for test execution, CI pipelines, defect databases

The MCP client (usually embedded in an LLM application or AI agent) calls these tools with structured inputs and receives structured JSON responses

In QA this means an agent can request test execution from a CI tool, read telemetry and logs, analyze failures, and open a Jira defect, all through one protocol instead of maintaining dozens of brittle integrations.
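To make the server-client exchange concrete, here is a minimal in-process sketch of how a server might answer the two tool-related requests, `tools/list` and `tools/call`, over JSON-RPC 2.0. The message shapes follow a simplified reading of the MCP specification; the `run_smoke_tests` tool, its schema, and its handler are hypothetical, and a real deployment would use an MCP SDK and a transport rather than this hand-rolled dispatcher.

```python
import json
from typing import Any

# Hypothetical tool registry: the tool name, schema, and handler are illustrative only.
TOOLS: dict[str, dict[str, Any]] = {
    "run_smoke_tests": {
        "description": "Queue the smoke suite for a branch (illustrative).",
        "inputSchema": {
            "type": "object",
            "properties": {"branch": {"type": "string"}},
            "required": ["branch"],
        },
        "handler": lambda args: f"queued smoke suite for {args['branch']}",
    },
}


def _reply(req_id: Any, *, result: Any = None, error: Any = None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    body: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle_request(raw: str) -> str:
    """Dispatch one request: advertise tools, or call one with structured input."""
    req = json.loads(raw)
    method = req.get("method")
    params = req.get("params") or {}
    if method == "tools/list":
        tools = [
            {"name": name, "description": spec["description"],
             "inputSchema": spec["inputSchema"]}
            for name, spec in TOOLS.items()
        ]
        return _reply(req["id"], result={"tools": tools})
    if method == "tools/call":
        spec = TOOLS.get(params.get("name"))
        if spec is None:
            return _reply(req["id"], error={"code": -32602, "message": "Unknown tool"})
        text = spec["handler"](params.get("arguments") or {})
        return _reply(req["id"], result={
            "content": [{"type": "text", "text": text}],
            "isError": False,
        })
    return _reply(req["id"], error={"code": -32601, "message": "Method not found"})
```

The point of the sketch is the shape of the contract: the client discovers tools and their input schemas at runtime instead of being coded against each vendor's API.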

Immediate implications:

Context-rich automation: AI can reason over real telemetry, not just static specifications

Unified orchestration: The same protocol links test authoring, execution, triage, and reporting

Reduced connector debt: Once a QA tool exposes MCP, every model client can use it without custom APIs

The real question isn't whether MCP works; Anthropic and partners have proven the technical feasibility. The question is whether your organization can execute the transformation without creating new security gaps or vendor lock-in worse than what you're trying to escape.

Should teams enhance existing tools or adopt AI-native platforms?

Enhancing existing stacks preserves stability and lowers cost. Adopting AI-native platforms accelerates innovation but raises migration and security complexity. Most enterprises overlook a third path: open-source orchestration that delivers control without platform lock-in.

Enhancing existing tools

Keeps CI/CD and test suites intact, ideal when governance requirements or legacy investments are high. Critical limitation: Tools not built for live model context deliver limited AI leverage. You're bolting intelligence onto architecture designed for deterministic scripts.

Adopting AI-native platforms

Vendors like Applitools, Mabl, Testim, and Functionize embed LLM-driven self-healing and analytics.

Rapid onboarding with rich dashboards and vendor-managed infrastructure. Trade-off: High SaaS cost ($50K-$500K+ annually depending on scale) and limited extensibility. You get what the vendor builds, on their timeline.

Emerging third path: Playwright + MCP open-source stack

Instead of buying a platform, teams can connect Playwright (for browser/UI automation) through open-source MCP servers like executeautomation/mcp-playwright or modelcontextprotocol/servers.

Benefits: Low licensing cost (open-source core, pay only for LLM API usage), full control and customizability, easier compliance in self-hosted environments, and no vendor roadmap dependency.

Challenges: Engineering burden for integration and maintenance, need for comprehensive observability, slower time-to-value compared to turnkey platforms, and security hardening is your responsibility.

If your security team won't approve third-party AI platforms touching production data, this may be your only viable path, but be honest about whether you have the engineering capacity to own it.

What are the integration challenges and benefits of MCP adoption for QA?

MCP simplifies tool connectivity but introduces new operational disciplines around identity, observability, and determinism that most QA organizations are unprepared to manage.

Benefits:

Unified connectors: One protocol to reach CI systems, dashboards, and test executors

Structured metadata: Richer assertions and better traceability across the test lifecycle

Faster feedback loops: AI can design, run, and analyze tests within one conversational workflow

Challenges:

Identity & permission sprawl: Every tool call must carry scoped credentials. Are you ready to manage OAuth tokens, service accounts, and permission boundaries for AI agents that operate across 15+ systems? Most organizations discover this complexity after deployment, when an agent accidentally accesses production databases (OWASP AI Security Guidelines).

Non-determinism: LLM decisions need validation layers. When an agent decides to skip a flaky test, who approved that logic? Validation layers add latency and complexity, but skipping them invites chaos.

Observability gap: Logging and auditing MCP exchanges are mandatory for debugging and compliance. If you can't trace which agent made which API call with whose credentials, you're building a forensics nightmare.

Tool maturity: Vendor MCP implementations vary wildly in completeness. Some expose read-only operations; others grant full write access without granular controls. Evaluate carefully, or you'll integrate with half-baked APIs that change with every release.
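The non-determinism challenge above can be made tangible with a small validation layer that sits between an agent's proposal and its execution. This is a minimal sketch under stated assumptions: the action names, the two policy sets, and the `ProposedAction` record are all hypothetical, and a production gate would load policy from configuration and record every decision.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: what an agent may do unattended vs. what needs a named human.
AUTO_APPROVED = frozenset({"rerun_test", "attach_logs"})
NEEDS_HUMAN = frozenset({"skip_test", "close_defect"})


@dataclass(frozen=True)
class ProposedAction:
    agent_id: str
    action: str
    target: str
    rationale: str  # the agent's stated reason, kept for later review


def review(proposal: ProposedAction, approved_by: Optional[str] = None) -> str:
    """Return 'execute', 'hold_for_approval', or 'reject' for a proposed action."""
    if proposal.action in AUTO_APPROVED:
        return "execute"
    if proposal.action in NEEDS_HUMAN:
        # Sensitive actions run only once a human identity is attached.
        return "execute" if approved_by else "hold_for_approval"
    # Anything not explicitly listed is denied by default.
    return "reject"
```

The deny-by-default branch is the important design choice: an agent that invents a new kind of action gets a rejection rather than an accidental pass.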

Cost-Benefit Analysis: Enhancement vs Migration vs Playwright + MCP Hybrid

Each path trades up-front cost for long-term flexibility and maintenance savings; the hybrid open-source route can be cheaper but demands engineering discipline that many teams overestimate.

Comparison Matrix:

| Criterion | Enhance Existing | AI Platform | Playwright + MCP |
| --- | --- | --- | --- |
| Licensing | Low | High recurring | None/open-source |
| Setup & Migration | Minimal | Heavy, asset rebuild | Medium, infra & integration |
| Maintenance Load | Moderate | Low, vendor managed | High, self-maintained |
| Feature Breadth | Limited AI capabilities | Advanced AI dashboards | Flexible, DIY features |
| Governance Control | High | Medium, vendor black box | Highest, you host |
| ROI Horizon | 6-12 months | 12-24 months | 9-18 months if automated well |

For small to mid-size teams, Playwright + MCP often yields the best cost-to-control ratio: lower spend, full transparency, and acceptable velocity, if engineering resources exist and leadership commits to ongoing infrastructure investment.

The dirty secret vendors won't tell you: platform lock-in isn't just about cost. It's about losing the ability to customize workflows when your testing needs evolve faster than their product roadmap.

How to implement MCP-based QA strategies for different team sizes?

Scale MCP adoption gradually, tailoring architecture depth to team capability. Rushing to "AI everywhere" without foundational discipline creates more problems than manual testing ever did.

Small teams (1-10 engineers):

Start with a Playwright + MCP pilot exposing only safe read-only operations. Goals: AI-generated smoke tests, console-log triage, experiment with prompt templates. Guardrails: Manual approvals for any test execution, ephemeral API keys with 24-hour expiration, full logging of every MCP interaction. Timeline: 4-8 weeks for pilot; measure time saved on test creation and maintenance.
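One way to enforce the "safe read-only operations" guardrail for a pilot is an explicit allowlist applied both when tools are advertised and when they are called. The sketch below is illustrative only: the tool names are hypothetical, and it assumes your MCP server lets you hook both steps.

```python
# Hypothetical allowlist of read-only tools for the pilot.
READ_ONLY_TOOLS = frozenset({"get_test_report", "read_console_log", "list_flaky_tests"})


def filter_advertised_tools(all_tools: list) -> list:
    """Advertise only allowlisted tools, so the agent never sees write operations."""
    return [tool for tool in all_tools if tool["name"] in READ_ONLY_TOOLS]


def guard_call(tool_name: str) -> None:
    """Second line of defense: refuse any call that is not on the allowlist."""
    if tool_name not in READ_ONLY_TOOLS:
        raise PermissionError(f"tool '{tool_name}' is not enabled in the read-only pilot")
```

Checking at call time as well as at advertisement time matters because a client can request a tool name it was never shown.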

Mid-size teams (10-50 engineers):

Combine existing CI/CD with MCP servers for test orchestration. Add capabilities: AI-assisted test maintenance (self-healing locators, flaky test analysis), automated triage with human-in-the-loop approvals, integration with existing defect tracking. Security hardening: SSO/OIDC integration for all MCP clients, role-based access control (RBAC) for agent permissions, comprehensive audit logging with SIEM integration. Timeline: 3-6 months for production rollout.

Large enterprises (50+ engineers):

Build a QA Platform as a Service exposing standardized MCP endpoints. Enterprise requirements: Formalize SBOM (Software Bill of Materials) for all MCP servers, implement artifact signing and verification pipelines, governance boards to approve agent behaviors and data flows, dedicated platform team for MCP infrastructure. Compliance alignment: GDPR, SOC2, ISO 27001 controls mapped to MCP operations, data-classification rules preventing PII exposure, regular penetration testing of MCP endpoints. Timeline: 9-18 months for enterprise-wide deployment.

Vendor Landscape: Commercial vs Open-Source MCP Ecosystem

The landscape is bifurcating with enterprise vendors layering AI atop legacy tools, and an open-source movement embedding MCP into developer-friendly frameworks, but neither path is risk-free.

Enterprise AI Testing Platforms (Mabl, Applitools, Testim, Functionize): Robust analytics, managed infrastructure, SLAs. Cautions: High cost, limited extensibility, vendor roadmap dependency.

Traditional Vendors Adding AI (Tricentis, Katalon): Familiarity, deep CI integration. Cautions: Shallow AI features initially; playing catch-up to AI-native competitors.

Open-Source + MCP Frameworks (Playwright MCP, Cypress Agents): Flexibility, transparency, cost-efficiency. Cautions: Requires DevOps ownership; community support varies; security hardening needed.

Infrastructure Providers (Anthropic, OpenAI): Managed LLM connectivity, evolving standards. Cautions: Early-stage standards, identity fragmentation, pricing unpredictability.

Critical insight: Traditional QA vendors are retrofitting AI onto architectures built for deterministic workflows. AI-native platforms start with the right assumptions but lock you into their ecosystem. Open-source gives you freedom and risk in equal measure.

Decision Framework for Selecting a QA Tooling Path

Use a structured matrix balancing business impact against integration and governance risk. Intuition and vendor pitches are not strategies.

Step-by-step framework:

1. Inventory current state: List all test tools, assets, and data sensitivity classifications

2. Value scoring: Estimate benefits such as velocity gains, coverage improvements, and MTTR (Mean Time To Resolution) reduction

3. Risk scoring: Assess security exposure, compliance requirements, vendor lock-in probability, and migration effort

4. Plot on a 2×2 matrix: "Value vs Risk" to visualize options

5. Select pilot: Choose option in high-value, low-risk quadrant for initial 90-day trial

6. Define KPIs: Regression suite execution time (target: 30-50% reduction), maintenance hours per week (target: 20-40% reduction), early-defect detection rate (target: 15-25% improvement)

7. Scale decision: Only proceed to full adoption after measurable wins AND passed security audit
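Steps 2 through 5 of the framework can be sketched as a small scoring helper. Everything here is an assumption for illustration: the 0-10 scale, the midpoint threshold of 5, and the idea of ranking candidates by value minus risk. Your own scores should come from the inventory in step 1, not from this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    value: float  # estimated benefit on an assumed 0-10 scale
    risk: float   # estimated security, compliance, lock-in, and migration risk, 0-10


def quadrant(option: Option, threshold: float = 5.0) -> str:
    """Place an option in one of the four cells of the Value vs Risk matrix."""
    value_label = "high-value" if option.value >= threshold else "low-value"
    risk_label = "high-risk" if option.risk >= threshold else "low-risk"
    return f"{value_label}, {risk_label}"


def pilot_candidates(options: list, threshold: float = 5.0) -> list:
    """Return high-value, low-risk options, best margin (value minus risk) first."""
    picks = [o for o in options if o.value >= threshold and o.risk < threshold]
    return sorted(picks, key=lambda o: o.value - o.risk, reverse=True)
```

For example, scoring three options as `Option("Enhance existing", 4, 2)`, `Option("AI platform", 8, 7)`, and `Option("Playwright + MCP", 7, 4)` (made-up numbers) leaves only the last one in the pilot quadrant.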

Don't let vendor demos or conference hype drive your decision. If you can't articulate the specific problem MCP solves for your organization with dollar amounts and timeline estimates, you're not ready to adopt.

Implementation Strategies: Full AI Platform vs Playwright + MCP Open Stack

Both paths can coexist. The platform route buys maturity and speed; the open-stack route buys control and affordability, but mixing them without clear boundaries creates operational chaos.

Strategy A: Full AI Testing Platform Adoption

Deploy vendor platform end-to-end (self-healing, dashboards, analytics). Ideal for: Organizations prioritizing speed-to-capability over customization, teams with limited DevOps capacity, enterprises with budget flexibility ($100K+ annually). Requirements: Data-sharing agreements and security review, budget alignment across fiscal years, executive sponsorship for vendor relationship. Governance approach: Trust but verify vendor security posture. Demand SOC2 reports, penetration test results, and incident response playbooks.

Strategy B: Playwright + MCP Open Stack

Run Playwright browsers and an MCP server in your environment. Implementation: Integrate with internal CI pipelines (GitHub Actions, Jenkins, GitLab CI), connect to hosted LLMs (Anthropic Claude, OpenAI GPT-4) or self-hosted models, build custom dashboards using Grafana or similar.

Advantages: Lower OPEX (operational expenditure), complete data control with no third-party data sharing, full extensibility for custom workflows. Disadvantages: Higher DevOps load (plan for 0.5-1 FTE dedicated to platform maintenance), need for prompt engineering expertise, custom dashboards and observability tooling required. Best fit: Engineering-driven QA teams comfortable maintaining infrastructure and with strong DevOps partnership.

Strategy C: Hybrid Progressive Adoption

Use commercial AI platform for mission-critical regression suites and executive reporting. Use Playwright + MCP for R&D, exploratory testing, or cost-sensitive workloads. Benefits: Fastest learning curve, balanced risk exposure, flexibility to shift investment based on results. Challenge: Managing two parallel systems requires discipline. Clear ownership boundaries and integration points must be documented and enforced.

Security & Compliance in AI QA Tooling

Security becomes protocol-centric. MCP endpoints must be governed like microservices, not treated as "just another testing tool."

Essential security controls:

Identity & Authentication: OIDC/OAuth 2.1 for all MCP clients (OAuth 2.1 Specification), ephemeral credentials with 1-4 hour expiration (no long-lived API keys), and just-in-time provisioning that issues credentials only when an agent requests access.
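The ephemeral, scoped, just-in-time pattern can be illustrated with an in-memory stand-in. This is not an OAuth implementation: in practice your identity provider would mint and validate the tokens, and the `EphemeralCredential` type, scope strings, and `issue`/`is_valid` helpers below are hypothetical names used only to show the lifetime and scope checks.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Iterable, Optional

MAX_TTL_SECONDS = 4 * 3600  # upper bound taken from the 1-4 hour guidance above


@dataclass(frozen=True)
class EphemeralCredential:
    token: str
    agent_id: str
    scopes: frozenset
    expires_at: float  # Unix timestamp


def issue(agent_id: str, scopes: Iterable[str], ttl_seconds: int = 3600,
          now: Optional[float] = None) -> EphemeralCredential:
    """Mint a short-lived credential at the moment an agent requests access."""
    if not 0 < ttl_seconds <= MAX_TTL_SECONDS:
        raise ValueError("ttl_seconds must be between 1 second and 4 hours")
    issued_at = time.time() if now is None else now
    return EphemeralCredential(
        token=secrets.token_urlsafe(32),
        agent_id=agent_id,
        scopes=frozenset(scopes),
        expires_at=issued_at + ttl_seconds,
    )


def is_valid(cred: EphemeralCredential, required_scope: str,
             now: Optional[float] = None) -> bool:
    """A call is allowed only if the credential is unexpired and carries the scope."""
    current = time.time() if now is None else now
    return current < cred.expires_at and required_scope in cred.scopes
```

The `now` parameter exists only so expiry behavior can be tested without waiting; production code would rely on the clock.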

Supply Chain Security: Signed binaries (all MCP server artifacts must be cryptographically signed), SBOM verification (maintain a Software Bill of Materials and scan it for vulnerabilities), and dependency pinning (lock to specific versions and verify checksums on every deployment).
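The checksum part of dependency pinning is simple enough to sketch with the standard library. Note the limitation: this only compares a SHA-256 digest against a pinned value and does not verify a cryptographic signature, which needs dedicated signing tooling. The function names are illustrative.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, pinned_sha256: str) -> bool:
    """Compare the artifact's digest with the pinned one in constant time."""
    return hmac.compare_digest(sha256_hex(data), pinned_sha256.lower())
```

A deployment step would read the artifact, call `verify_artifact` with the digest recorded in your lock file, and abort on `False`.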

Data Protection: Classification rules blocking PII from outbound MCP calls using DLP policies, network segmentation restricting MCP server outbound access to approved endpoints only, encryption in transit with TLS 1.3 for all MCP communications.
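As a rough illustration of blocking PII on outbound calls, the sketch below redacts email addresses from tool arguments before they leave your boundary. It is deliberately narrow: one regular expression for one data type, with hypothetical names throughout. A real DLP policy would cover far more categories and would not rely on regex alone.

```python
import re
from typing import Any

# Simplified email pattern for illustration; real DLP rules are broader.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(value: Any) -> Any:
    """Recursively replace email addresses in strings nested in dicts and lists."""
    if isinstance(value, str):
        return EMAIL.sub("[REDACTED_EMAIL]", value)
    if isinstance(value, dict):
        return {key: redact(item) for key, item in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # numbers, booleans, None pass through unchanged
```

Applying `redact` to the `arguments` of every outbound tool call gives one choke point where classification rules can be enforced and audited.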

Observability & Forensics: Comprehensive logging with every MCP interaction tied to human or service identity, SIEM integration feeding logs to security information and event management systems, audit trails providing immutable records of agent decisions and actions.
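One common way to approximate an immutable audit trail is a hash chain, where each entry commits to the one before it so that later tampering is detectable. The sketch below is a minimal in-memory version under assumed field names; it omits timestamps and durable storage, and a real system would also ship entries to a SIEM.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 over the entry body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, identity: str, tool: str, arguments: dict) -> dict:
    """Record who called which tool with what, chained to the previous entry."""
    body: dict[str, Any] = {
        "identity": identity,
        "tool": tool,
        "arguments": arguments,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

Because every entry names an identity, the same structure answers the forensic question of which agent made which call with whose credentials.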

Compliance Mapping: GDPR (right to erasure, data minimization, purpose limitation), ISO 27001/SOC2 (access controls, change management, incident response), industry-specific regulations (HIPAA for healthcare, PCI-DSS for payment systems).

Playwright + MCP specific hardening: Isolate browser nodes by running Playwright in sandboxed containers or VMs, restrict network access to only the necessary outbound connections, and disable unnecessary features by removing unused Playwright APIs.

If your security team doesn't understand MCP identity flows, you're about to hand AI agents the keys to production, and no vendor will tell you this upfront. Schedule security architecture review before pilot kickoff, not after the breach.

How QA Tooling Will Evolve in the Next 2-5 Years

Expect consolidation around MCP-like standards, stronger identity frameworks, and hybrid AI-human QA ecosystems, but fragmentation could persist if competing protocols emerge.

High-confidence predictions (70%+ likelihood):

MCP standardization across major vendors: Top 5 QA platforms will expose MCP endpoints by 2026; competitive pressure will force adoption even by traditional vendors.

Agent orchestration layers: Specialized agent management platforms will emerge to coordinate fleets of test agents, similar to how Kubernetes manages containers.

Autonomous regression suites: AI-generated and maintained test packs will become standard, with human approvals limited to critical business flows.

Medium-confidence predictions (50-70% likelihood):

Open-source enterprise hardening: Playwright MCP and similar projects will add enterprise features (audit logging, RBAC, compliance reporting) as adoption grows.

Regulatory codification: Industry bodies or governments will mandate AI-tool supply-chain audits, similar to current software composition analysis requirements.

Hybrid human-AI workflows: Most organizations will run parallel systems: AI for regression and maintenance, humans for exploratory and risk-based testing.

Wildcard scenarios (30-50% likelihood):

Protocol fragmentation: Microsoft, Google, or AWS could launch competing standards, creating another integration war.

LLM reliability breakthroughs: Improvements in model consistency could eliminate the need for human validation layers, accelerating autonomous testing.

Security backlash: High-profile breaches involving AI agents could trigger regulatory crackdown and slow adoption.

Strategic implication: Don't bet your entire QA transformation on MCP being the final answer. Build flexibility into your architecture with abstraction layers that let you swap protocols without rebuilding your entire testing infrastructure.
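An abstraction layer of this kind can be as small as one interface that test workflows depend on, with protocol-specific adapters behind it. The sketch below is hypothetical throughout: `ToolGateway`, the two adapters, the tool name, and the example URL are invented for illustration, and the adapters only build request descriptions rather than performing network calls.

```python
from typing import Any, Protocol


class ToolGateway(Protocol):
    """The only interface workflow code is allowed to depend on."""

    def call(self, tool: str, arguments: dict) -> dict: ...


class McpGateway:
    """Adapter that would wrap a real MCP client; here it only builds the request."""

    def call(self, tool: str, arguments: dict) -> dict:
        request = {"method": "tools/call", "params": {"name": tool, "arguments": arguments}}
        return {"via": "mcp", "request": request}


class RestGateway:
    """Adapter for a conventional REST integration behind the same interface."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url.rstrip("/")

    def call(self, tool: str, arguments: dict) -> dict:
        return {"via": "rest", "request": {"url": f"{self.base_url}/{tool}", "json": arguments}}


def triage_failure(gateway: ToolGateway, test_id: str) -> dict[str, Any]:
    """Workflow code stays identical whichever protocol sits behind the gateway."""
    return gateway.call("get_failure_logs", {"test_id": test_id})
```

Swapping protocols then means writing one new adapter, not touching every workflow that calls `triage_failure`.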

Gradual vs Wholesale Adoption: Which Path Delivers More Value?

Phased rollout wins on stability and trust; wholesale migration only pays off with strong ROI evidence and executive mandate, but most organizations lack the discipline for either.

Gradual Adoption Plan (Recommended)

Phase 0: Discovery (4-6 weeks): Inventory all test tools and classify data sensitivity, identify quick-win use cases with low security risk, establish baseline metrics (test creation time, maintenance overhead, defect detection rate).

Phase 1: Read-Only Pilot (8-12 weeks): Deploy MCP server exposing only read operations (log analysis, test coverage reports), AI suggests tests but doesn't execute them, human review and approval for all suggestions, measure how often suggestions are useful and time saved.

Phase 2: Controlled Execution (12-16 weeks): Connect Playwright executor for non-production smoke flows, require dual approvals for test execution, implement comprehensive logging and audit trails, measure execution time reduction and false positive rate.

Phase 3: Security Hardening (8-12 weeks): Add identity layer (SSO/OIDC), implement ephemeral credentials, deploy signed artifacts and SBOM verification, pass security audit before proceeding.

Phase 4: Production Scale (ongoing): Expand coverage incrementally (one application domain per quarter), introduce autonomous triage with human oversight, continuously monitor for drift, security anomalies, and ROI.

Wholesale Adoption Trigger

Proceed with full platform replacement ONLY if all conditions are met: ≥40% of existing test assets are already compatible with AI-assisted workflows, ROI model shows <18-month payback period with conservative assumptions, governance framework ready (SOC2/ISO certified processes), executive sponsorship with multi-year budget commitment, security and compliance teams have signed off, vendor selection completed with contractual SLAs.
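The trigger conditions above work as an all-or-nothing gate, which is easy to encode. In this sketch the field names are hypothetical and payback is computed as a simple ratio of up-front cost to monthly net savings, which is an assumption; your finance team may prefer a discounted model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Readiness:
    compatible_asset_ratio: float  # share of test assets ready for AI-assisted workflows
    upfront_cost: float
    monthly_net_savings: float     # conservative estimate
    governance_ready: bool
    executive_sponsorship: bool
    security_signoff: bool
    vendor_slas_signed: bool


def payback_months(upfront_cost: float, monthly_net_savings: float) -> float:
    """Simple payback period; infinite if the change never saves money."""
    if monthly_net_savings <= 0:
        return float("inf")
    return upfront_cost / monthly_net_savings


def unmet_conditions(r: Readiness) -> list:
    """List every trigger condition that fails; an empty list means proceed."""
    checks = {
        "compatible assets >= 40%": r.compatible_asset_ratio >= 0.40,
        "payback < 18 months": payback_months(r.upfront_cost, r.monthly_net_savings) < 18,
        "governance framework ready": r.governance_ready,
        "executive sponsorship": r.executive_sponsorship,
        "security and compliance sign-off": r.security_signoff,
        "vendor SLAs contracted": r.vendor_slas_signed,
    }
    return [name for name, passed in checks.items() if not passed]
```

Returning the list of failures rather than a single boolean keeps the conversation with leadership specific about what is missing.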

Reality check: Fewer than 10% of organizations meet all these criteria. If you're pushing for wholesale adoption without these foundations, you're setting up for failure and will be rebuilding your testing infrastructure in 24 months.

Practical Recommendations for QA Architects and Engineering Leaders

Treat MCP as infrastructure, not magic. Pilot early, secure deeply, measure relentlessly, and maintain the discipline to shut down failed experiments.

Immediate actions (next 30 days):

Start a 90-day pilot comparing a vendor AI platform and Playwright + MCP side-by-side on the same application. Establish an AI QA governance board that includes representatives from Security, Legal, Engineering, and QA leadership. Demand transparency from vendors: request MCP roadmaps, signed artifacts, and incident response plans. Invest in skills development by allocating training budget for prompt engineering, AI agent safety, and observability tools.

Strategic commitments (next 6-12 months):

Quantify ROI religiously: track maintenance hours saved, defect detection speed, and cost per test run. If you can't measure it, you can't manage it. Plan for a hybrid future: assume that coexistence of commercial AI platforms and open-source MCP automation will be the enterprise norm. Build abstraction layers: avoid tight coupling to any single protocol or vendor, and create adapter interfaces that let you swap implementations. Prepare for security incidents: have runbooks ready for credential exposure, agent misbehavior, and supply-chain compromise.

Cultural shifts required:

Embrace uncertainty: AI testing introduces non-determinism. Your team must learn to work with probabilistic outcomes.

Shift from gatekeeping to governance: QA's role evolves from manually running tests to governing agent behavior.

Invest in observability: You need 10x better logging and monitoring when AI agents operate your quality pipeline.

The hard question nobody wants to answer: If your current QA team struggles with basic CI/CD pipelines, adding AI agents will amplify chaos, not reduce it. Fix your operational discipline first. MCP won't magically create the maturity you're missing.

Closing: A Strategic Verdict

MCP is not another shiny object. It's the architectural pivot that will unify AI-assisted testing. But transformation succeeds only when executed deliberately, with evidence-driven pilots, clear governance, and willingness to mix open and commercial solutions.

The quality assurance function stands at an inflection point. The question isn't whether AI will reshape testing; it already is. The question is whether your organization will lead that transformation or spend years recovering from botched adoption attempts.

MCP offers a genuine path forward: standardized connectivity that can break the integration hell currently consuming 30-40% of QA engineering time. But it's not a silver bullet. It's infrastructure, powerful when deployed with discipline, catastrophic when rushed.

Three truths to guide your strategy:

Start small, scale deliberately: Every successful MCP adoption began with constrained pilots, clear metrics, and the courage to shut down failed experiments.

Security cannot be an afterthought: Organizations that treat MCP endpoints like production APIs from day one avoid the breaches that tank credibility.

Hybrid is the reality: The future isn't "commercial platform or open-source"; it's strategic deployment of both, based on workload characteristics and organizational capabilities.

Your competitors are already experimenting. The leaders who move first with discipline, not recklessness, will build the quality systems that define the next decade. The laggards will spend years paying down technical debt from rushed adoption or sitting paralyzed by analysis.

The clock is running. What's your next move?